If you know much about computers, or even if you don’t, chances are you’ve heard the term CPU. The CPU, which stands for Central Processing Unit, is an essential part of every system from your home laptop to the servers hosting your company’s website. But what is a CPU, and what role does it play in the infrastructure of your system? Are cores and vCPU the same? What about multithreading?

In this analysis, we’ll look at the functions of a CPU, discuss the roles of and differences between physical cores and logical cores, and examine why the vCPU promised by the major cloud providers isn’t all it’s cracked up to be.


What Is a CPU?

The Central Processing Unit, or Processor, is the brain of your computer. It’s responsible for interpreting instructions, delegating tasks, and performing calculations. When you tell your computer to do something, like load up a video, the instructions you relay through the mouse and keyboard are interpreted through the CPU and delegated out. In early computers, the CPU would have handled the execution of these tasks on its own. However, in modern hardware, the other components, such as the GPU, process certain actions themselves. In this way, modern CPUs have grown to hold a more supervisory role, handling fewer calculations directly, but still overseeing task completion.

Before the rise of smartphones and tablets, this communication process between you and your system’s CPU would have been handled using a series of Chipsets. Chipsets are integrated circuits which connect your computer’s CPU to RAM, storage, and any external attachments, such as your keyboard. Now though, as technology has continued shrinking, SoC (System on a Chip) solutions have all but replaced chipsets in smaller devices. As a single-chip solution containing the CPU, GPU, memory, and more, an SoC offers a faster and more space-efficient alternative to older, multi-chip solutions. With all these components combined onto a single chip, the end result is a processor that performs faster and more reliably.

In the coming years though, with the continued growth of large, hyperscale data centers, the role of the CPU may diminish even further. In order to manage the sprawling infrastructure of these massive facilities and the many CPUs that combine to make them, the DPU (Data Processing Unit) may overtake the CPU as the true “Processor” in most large systems. For general purpose computing though, the CPU remains the primary means of instruction processing, at least for now.

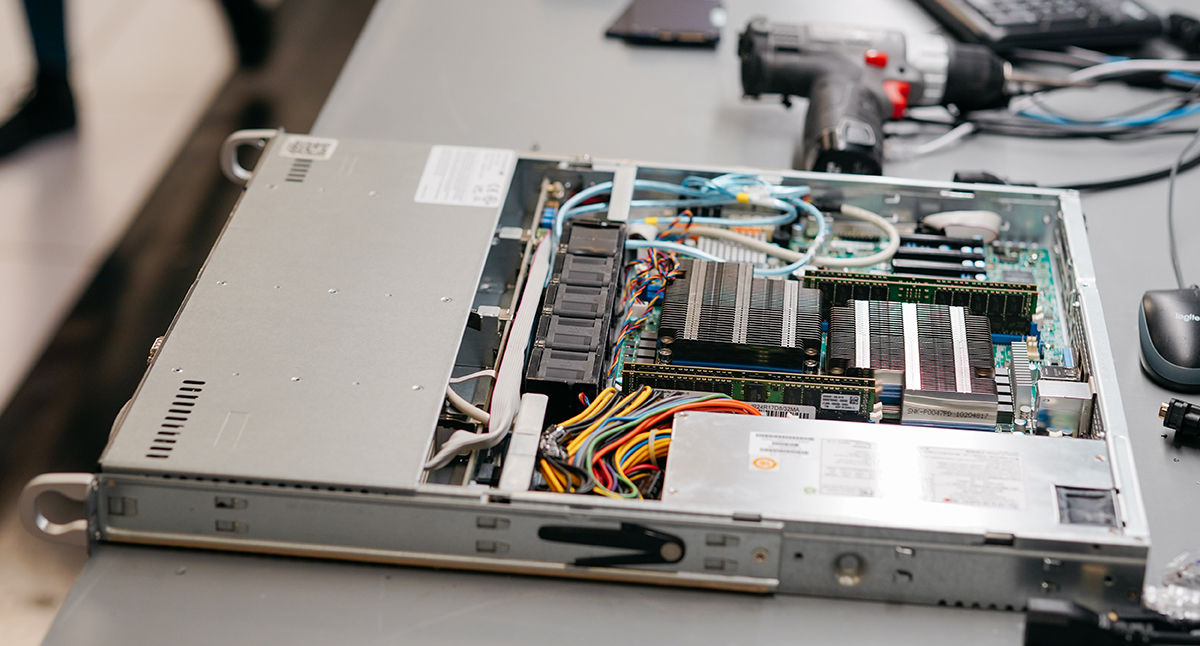


Think of it this way: you, the user, are the CEO of a company. Your CPU is your middle management team. When something needs to be done, you don’t do it yourself. That’s not the job of the CEO. You make decisions and give instructions. You determine what needs to happen and your managers figure out the details. They crunch the numbers and determine which departments (the other components of your computer) need to do what in order for the project to be handled most efficiently and effectively. They’re not doing it all themselves either, but they’re still overseeing the various pieces as they move throughout your different departments. This is what your CPU does. It makes things happen, only much, much faster than this.

On a technical level, the reason your computer can process so many complicated functions so quickly and simultaneously is the way CPUs are built. At its core, a CPU is a chip jammed full of billions of tiny transistors, installed into a socket on your system’s motherboard. These transistors work like light switches, switching on or off in response to the 1s and 0s of incoming binary. At its simplest level, this is what allows your computer to do everything it does.

The reason these chips keep getting faster is that as our technology improves, our transistors get smaller. That means more of them fit onto a single chip, and processing power improves.

So if a CPU is a chip full of tiny transistors, then what is a core?

What Is a Core?

When the earliest CPUs were developed, they were all single core: one chip, in a single socket, installed on the motherboard. As mentioned above though, processing technology keeps shrinking. This means we can fit more onto a single chip. It also means we can fit multiple CPUs into a single socket. This is where we come to the definition of a core.

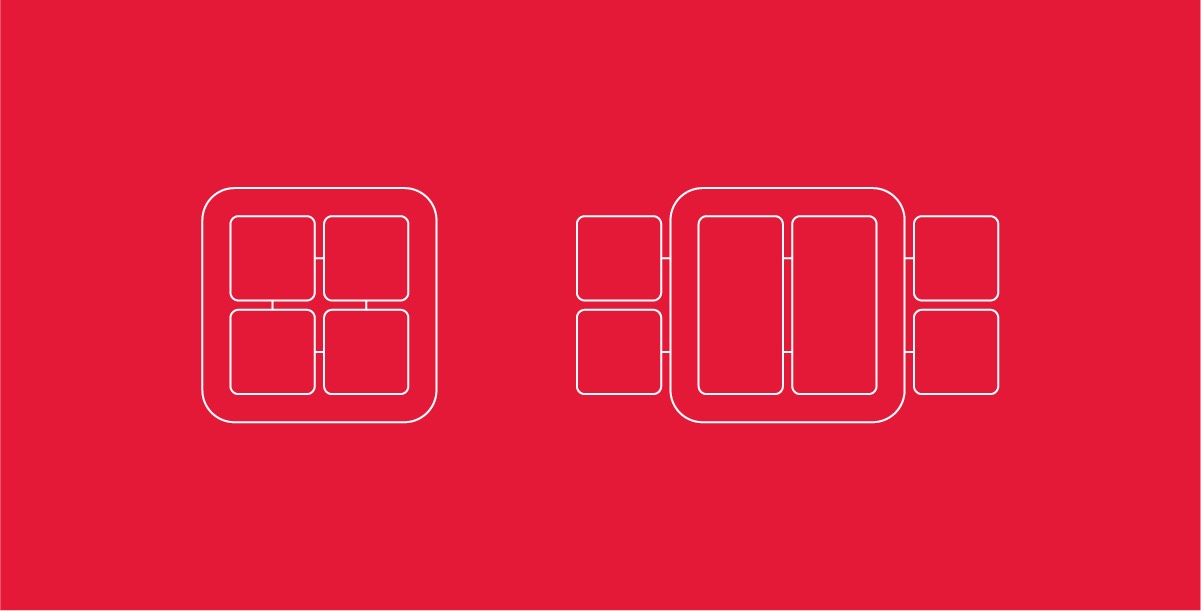

A Physical Core is a CPU contained on a chip, with the chip as a whole occupying a single socket. If you have a quad-core processor, you have 4 CPUs on a single chip. If you have an octa-core, you have 8. In other words, for each core your system possesses, it’s like having another brain to process commands with.

So, each core in your computer can process a separate task at the same time. The more processes that can run simultaneously, the faster your overall completion rate. Now, cores are certainly not the only factor contributing to the speed of your system, but for machines tasked with resource-intensive processes, such as AI, greater core counts can make a big difference.


The thing is, not all cores are the same. As the technology used in our processors grows more powerful, it also grows more subtle in its distinctions. This is where determining the true processing power of your system can start to get a little tricky.

Threading? Virtual cores? Are these all semantics or are there differences here you should be aware of?

Let’s take a look.

Physical Cores vs. Logical Cores

To understand the difference between the various types and components of CPUs, you must first understand the difference between physical cores and logical cores. A physical core, or physical CPU, is an actual hardware component of the CPU chip, and is the same as those cores discussed above. Your home PC’s quad-core processor has 4 physical cores. Each core is the equivalent of its own CPU, but rather than requiring 4 separate chips installed into 4 separate sockets, all 4 cores sit on the same chip. These are the cores that determine your system’s true capabilities, establishing how many resources are available to devote to processing instructions.

And then, there are logical cores. A logical core, also known as a logical CPU or virtual core, is a concept used to describe the way operating systems view processors utilizing Simultaneous Multithreading (SMT). Multithreading, or more popularly Hyper-Threading (Intel’s name for its simultaneous multithreading technology), is a technique by which a superscalar CPU core can work on more than one stream of instructions, known as a Thread, at a time, issuing instructions from multiple threads in the same cycle.

If a CPU has two-way SMT, that means each core can run two threads at a time. A quad-core superscalar CPU with two-way SMT is said to be a 4 core / 8 thread CPU. Because this threading allows the system to process up to eight tasks simultaneously, the machine’s OS views the system as having 8 logical cores. In this way, the term logical core is mostly a means of conceptualizing this interaction between your system’s hardware when processing instructions utilizing multithreading.
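The relationship between physical cores, SMT width, and the logical core count the OS reports can be sketched in a few lines of Python. The helper name `logical_cores` is our own, chosen for illustration; `os.cpu_count()` is the standard library call that reports the logical CPUs visible on whatever machine runs the script.

```python
import os


def logical_cores(physical_cores: int, threads_per_core: int) -> int:
    """Logical cores an OS would see for a given core count and SMT width."""
    return physical_cores * threads_per_core


# The example above: a quad-core CPU with two-way SMT.
print(logical_cores(4, 2))  # 8

# What the current machine reports. This is a count of logical CPUs,
# not physical cores, and may be None if it cannot be determined.
print(os.cpu_count())
```

Note that the number returned by `os.cpu_count()` alone won’t tell you how many of those logical CPUs are backed by separate physical cores.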

But is a logical core really the same as a physical core or CPU?

What Is Simultaneous Multithreading?

Let’s go back to our middle management example. You, the CEO, relay a task to your team of managers, who divide and conquer, tackling the problem as efficiently as possible. One of these tasks requires your factory workers to assemble a series of two different mechanical parts, in quick succession, at high volume. You only have a single production line and only so many employees. With your available resources limited, the task can only be completed so quickly. After all, your core can only handle so much at once.

But let’s say you promote a second supervisor in this department. You still have the same number of employees, i.e. your system resources, however, now you have your department split into two teams with two leaders, each handling a separate task. These tasks represent your threads.


In a non-multithreaded CPU, these tasks would have to be accomplished in succession, each one starting only after the previous task finishes completely.

With multithreading though, your machine is able to handle multiple tasks at once. The threads aren’t each given a full core of their own. Instead, they share a single core, working in and around each other’s schedules so that all the tasks finish as quickly as possible.

So, what does this distinction look like?

Your two production teams are using the same line to accomplish two different objectives. Because the production line is a physical object, it’s only so big and can only be used by so many people at once. However the actual components each team is using, the parts of the whole, don’t entirely overlap.

Let’s say that as part of Team 1’s task, they have to wait on a component which is being provided by another department. This creates downtime where the production line isn’t in use, because Team 1 is unable to move forward without the part they’re waiting on. In a non-multithreaded environment, this delay in Task 1 would result in a delay for Task 2 as well. Your workers would have to wait for Task 1 to finish completely before they could even start Task 2.

In the multithreaded two-team version of this scenario though, Team 2 doesn’t actually have to wait. If Team 1 is held up and isn’t using the production line, Team 2 is able to jump in and complete their task while Team 1 is waiting for its missing components to come in.

In this scenario, you haven’t increased your overall available resources, but you’re using them more effectively. Your efficiency and speed increase. This is the purpose of threading.

Simultaneous Multithreading makes your processors faster by allowing them to process multiple streams of instructions at once. Although the resource availability remains the same, the channels for processing instructions increase.

If a delay in thread 1’s task creates an opening in the pipeline, rather than sitting idle, a second, quicker thread can utilize those available resources and complete its task while thread 1 is still waiting. Essentially, by threading a core, you’re making it more efficient.
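To make the production-line picture concrete, here is a deliberately simplified toy model in Python. It assumes a pipeline that can execute one "work" step per cycle, while a "wait" step (a thread stalled on something external) elapses without using the pipeline. Real SMT cores are far more complex, and the function names and step lists are invented for illustration only.

```python
def run_sequential(threads):
    """No multithreading: each thread runs to completion before the next
    starts, so every step, working or waiting, costs one cycle."""
    return sum(len(steps) for steps in threads)


def run_interleaved(threads):
    """Toy SMT: at most one 'work' step executes per cycle, while 'wait'
    steps pass without occupying the pipeline."""
    position = [0] * len(threads)
    cycles = 0
    while any(pos < len(steps) for pos, steps in zip(position, threads)):
        cycles += 1
        pipeline_free = True
        for i, steps in enumerate(threads):
            if position[i] >= len(steps):
                continue  # this thread has already finished
            if steps[position[i]] == "wait":
                position[i] += 1  # waiting does not need the pipeline
            elif pipeline_free:
                position[i] += 1  # this thread gets the pipeline this cycle
                pipeline_free = False
            # otherwise the thread stalls until the pipeline is free
    return cycles


thread_1 = ["work", "wait", "wait", "wait", "work"]  # held up mid-task
thread_2 = ["work", "work", "work"]                  # short and busy

print(run_sequential([thread_1, thread_2]))   # 8
print(run_interleaved([thread_1, thread_2]))  # 5
```

In this model the second thread slots into the cycles the first one spends waiting. When both threads consist only of "work" steps, the interleaved version takes just as long as the sequential one, which is the resource-overlap limit discussed next.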

Now, in a perfect world, these threads would double the output of your cores, wouldn’t they? They’re twice as efficient, right?

While it’s true that multithreading allows your processors to handle greater volumes of complex commands, it is not the same as having twice the available physical cores. After all, even if your cores are more efficient, there will always be some degree of resource overlap. During these times, when a process demands the full resources of a designated core, whether it’s threaded or not will only make so much difference.


But this isn’t to say that multithreading isn’t great. The additional logical processors that threading yields undoubtedly make your system more efficient. However, just because a quad-core with multithreading is typically faster than 4 cores without doesn’t mean it’s the same as a true octa-core processor.

Does everyone need that level of processing power? No. But for those who do, this distinction makes a difference.

So, if a logical processor isn’t the same as a core, then what is vCPU?

What Is vCPU?

If you’re familiar with the term vCPU, chances are you or your company are clients of a public cloud provider, such as Amazon Web Services (AWS) or Google Cloud. vCPU, or Virtual CPU, is a term used by cloud IaaS providers to describe the computing power of the services they provide. We discuss this briefly in our analysis of the big three public cloud providers, in our article Is Your Cloud Too Expensive?

For example, the AWS c5d.2xlarge build we compared in that article is listed as having 8 vCPU of processing power. However, these are not physical cores. They are virtual cores, and as discussed previously, a virtual core is not the same as a physical core. Therefore, 8 vCPU is not a reflection of the instance’s physical hardware, but rather its logical processing power. What you’re really buying is just access to a fragment of that hardware.

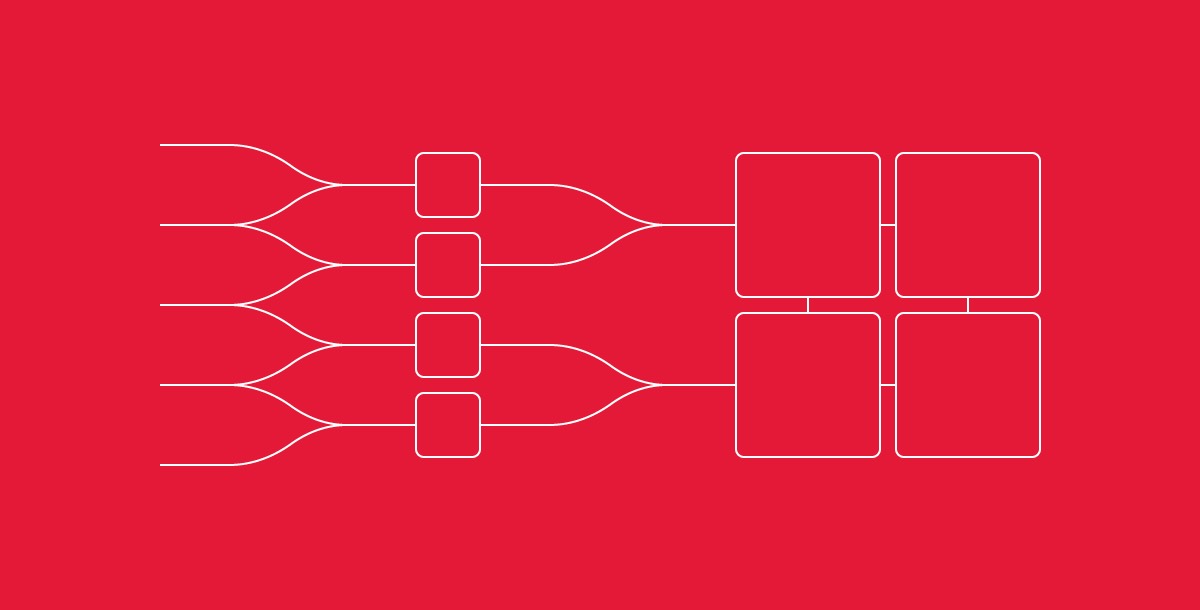

Through the use of hypervisors, public cloud providers like AWS split their servers into multiple virtual machines (VMs), which they sell to their users as separate instances. This can be done with or without SMT, but when the two are combined, the result is that a single physical core can be split into a multitude of different vCPUs. The usual formula for determining the maximum number of vCPUs a server can create before overselling its resources is: Threads x Cores x Sockets = Max vCPU. As applied here, Threads is the number of hardware threads per socket and Cores is the number of physical cores per socket.

So, if you have a 4 core server with two-way threading, all on a single socket, theoretically this server can be split into 32 vCPUs before creating a bottleneck (8 threads x 4 cores x 1 socket), even though its OS sees only 8 logical cores. If you have a dual E5-2620 v4 server with two-way SMT, you’re looking at upwards of 256 vCPUs on a single server (16 threads x 8 cores x 2 sockets). That’s a lot of VMs on a single machine.
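The arithmetic behind these two examples can be reproduced with a short Python sketch. The function name `max_vcpus` is our own, and the inputs are simply the figures quoted above, not measured values or provider limits.

```python
def max_vcpus(threads: int, cores: int, sockets: int) -> int:
    """Threads x Cores x Sockets, using the figures as quoted in the text."""
    return threads * cores * sockets


# 4 core / 8 thread server on a single socket.
print(max_vcpus(threads=8, cores=4, sockets=1))   # 32

# Dual-socket server with 8 cores / 16 threads per socket.
print(max_vcpus(threads=16, cores=8, sockets=2))  # 256
```

Treat the result as a theoretical ceiling for how far a server might be subdivided rather than a count of hardware that exists: in both cases it is several times larger than the number of logical cores the server actually has.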


Another way of thinking about this is to look back at our production line metaphor. We’ll use our 4 core / 8 thread / 32 vCPU example for this.

So, let’s say that your factory has 4 production lines (cores) and you’ve split your employees into two teams per production line (multithreading). You now have 4 lines and 8 teams handling 8 separate tasks. But according to our formula, if utilized efficiently, it should be possible for upwards of 32 teams (vCPUs) to operate these 4 production lines, so long as their resource overlap remains limited.

Essentially, the hypervisor allows you to split these teams further, allowing for an even greater number of tasks to be tackled simultaneously. It does this by splitting the threads used by each VM between different cores, efficiently utilizing whichever pipeline is free at the time.

In other words, the creation of vCPUs is basically a secondary version of SMT.

If a server with 32 logical processors might be supporting upwards of 256 vCPUs at once though, can we really say that vCPU is equivalent to physical CPU? After all, while it’s unlikely all 256 of those vCPUs will require access to those logical cores at the same time, it’s certainly not impossible to imagine that such a scenario could occur.
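One rough way to gauge how far a server is being stretched is the ratio of vCPUs to logical processors. The sketch below applies it to the 4 core / 8 thread / 32 vCPU example from earlier. Both helper names are hypothetical, and the model assumes each logical processor can run only one vCPU at any given instant.

```python
def overcommit_ratio(vcpus: int, logical_processors: int) -> float:
    """How many vCPUs have been promised per logical processor."""
    return vcpus / logical_processors


def max_running_share(vcpus: int, logical_processors: int) -> float:
    """Largest fraction of vCPUs that can be executing at the same instant,
    assuming one vCPU per logical processor at a time."""
    return min(1.0, logical_processors / vcpus)


print(overcommit_ratio(32, 8))   # 4.0
print(max_running_share(32, 8))  # 0.25
```

In other words, if every one of those 32 vCPUs wanted to run at once, only a quarter of them could do so at any moment, and the rest would be left waiting their turn.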

For this reason, when comparing the 8 vCPU AWS c5d.2xlarge unit mentioned above, we compare it not to a true octa-core server, but rather to one of our 4 core / 8 thread servers. This is because physical cores and logical cores are not identical. While most users would do fine with a processor like this, those who really do need 8 physical cores of processing power should know that these two things are not the same.
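The comparison above boils down to a one-line conversion, sketched here in Python. It assumes each vCPU corresponds to one hardware thread of a two-way SMT core, which is the assumption behind comparing 8 vCPU to a 4 core / 8 thread server. The function name is ours, and a given provider’s actual mapping may differ.

```python
def physical_core_equivalent(vcpus: int, threads_per_core: int = 2) -> float:
    """Physical cores behind a vCPU count, if each vCPU is one SMT thread."""
    return vcpus / threads_per_core


# An 8 vCPU instance under two-way SMT.
print(physical_core_equivalent(8))     # 4.0

# Without SMT, each vCPU would correspond to a full core.
print(physical_core_equivalent(8, 1))  # 8.0
```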

The terminology used can, at times, be intentionally misleading. A vCPU is not the same as a core.

So Is vCPU Actually Equal to Physical CPU?

In short? No. That’s not to say that vCPU is necessarily inferior. A system with 8 vCPU will likely run more efficiently than a system with 4. It’s just not the same as having 8 physical cores. The purpose of this analysis is not to discredit vCPU, but simply to make sure that this distinction between physical and logical processing is understood.

While increased logical processors can be a great asset, your physical cores, storage subsystem, and RAM are what mostly determine the true resource limitations of your system. When making infrastructure and hardware decisions, it’s important to know the role these subtle distinctions play. After all, in an industry so entrenched in terminology, it can be very easy to lose track of the differences between one keyword and another. Some providers use this to their advantage. If their customers don’t know the difference, it makes it easier to overpromise and underdeliver.


At Hivelocity though, we know a well-informed customer is a happy and successful customer. When our clients succeed, we succeed as well.

So if you’re curious about how a dedicated server or private cloud solution through Hivelocity can benefit you and your business, call or live chat with one of our sales agents today.

Whether you need 4 physical cores or 20, at Hivelocity, we offer instant and custom solutions designed to meet your needs. It’s all part of our commitment to bringing a more human touch to the hosting industry. Sign up today and take back control of your infrastructure.

Hivelocity is the hosting partner you can rely on — and there’s nothing virtual about that.

– Sean Kelly
