With an increasing number of companies relying on containerization to organize their infrastructure and run their applications, chances are you already know that Kubernetes (K8s) is one of the most flexible and efficient container solutions available. But what about Kubernetes at “the Edge”? What even is the Edge? That’s the funny thing: ask 3 different people and you’ll get 3 different answers. Regardless, there is one thing all those answers will agree on: the Edge is about putting compute closer to your end users.

Hi, I’m Zach Kazanski, Sr. VP of Software at Hivelocity, The Bare Metal Cloud Company. At Hivelocity we make it easy to spin up K8s and other applications on dedicated single-tenant servers all around the world. With 35+ locations strategically positioned in critical global markets, our expansive network of data centers lets you put your servers where your customers are. If you need K8s in multiple cities at the Edge, Hivelocity’s got you covered.

So then, what is the Edge? Read on to learn our answer to that question – this is our blog after all – as well as a technical dive into why and how you would want to run K8s there.

What Is the Edge?

First of all, the Edge is a location, not a thing. You don’t “use” the Edge; you go to it and put things there, usually servers. There are many “edges”, but the Edge we care about – the one that unlocks new functionality and market opportunity – is the last-mile network: the portion of the network that physically reaches end users, such as fiber cables to homes and cell towers to smartphones.

Why? Because the last mile’s bandwidth limits how much data can be delivered to and from the end user. [1]

Why Should You Care About the Edge?

TLDR: The speed and economics of the Edge enable applications and services that were previously impossible.

Speed

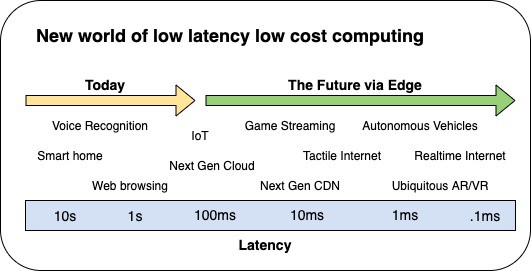

Connected devices, such as cellphones, can take 250 ms for their data to make a round trip from the device to a centralized cloud data center. That’s good enough for some applications, but many services of the future – autonomous vehicles, AR/VR, gaming, etc. – require lower latency.

Would you trust a self-driving car that can’t make near-instant decisions? Would you play a game with consistent lag?

By moving compute to the Edge and closer to the users, round-trip latencies under 20 milliseconds become possible, enabling the creation of previously impossible services and experiences.
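To see why distance matters so much, here is a back-of-the-envelope sketch of the physics alone. It assumes light travels through optical fiber at roughly 200 km per millisecond and deliberately ignores routing detours, queuing, radio access, and processing time, so real round trips will always be slower than these figures. The distances are illustrative, not measured routes.

```python
# Propagation-only lower bound on round-trip time over optical fiber.
# Assumption: light in fiber covers roughly 200 km per millisecond
# (about two-thirds of its speed in a vacuum). Routing detours, queuing,
# radio access, and processing time are ignored, so real RTTs are higher.
FIBER_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km: float) -> float:
    """Return the minimum round-trip time in ms for a one-way distance in km."""
    if distance_km < 0:
        raise ValueError("distance must be non-negative")
    # The signal travels out and back, so the path length doubles.
    return 2 * distance_km / FIBER_KM_PER_MS


if __name__ == "__main__":
    # Illustrative one-way distances, not measured network routes.
    for label, km in [("nearby edge site", 100), ("distant cloud region", 4000)]:
        print(f"{label}: at least {min_round_trip_ms(km):.1f} ms")
```

Under these assumptions, a data center 4,000 km away costs at least 40 ms per round trip before any processing happens, which already exceeds a 20 ms budget, while a site 100 km away costs about 1 ms.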

Economics

By design, centralized clouds cost you a fortune. Their bandwidth pricing is intentionally high to create vendor lock-in, but this makes some data-intensive applications – such as IoT – impossible due to the astronomical costs.

To improve the economics and enable more innovation, it is common to process data at the Edge rather than paying for the bandwidth and storage required to process the same data in the cloud: real-time processing happens at the Edge, and only key metrics are sent back to the cloud. Gartner predicts that by 2025, 75% of enterprise-generated data will be created and processed outside a traditional centralized data center or cloud.
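The “process locally, send key metrics” pattern can be sketched in a few lines: an edge node reduces a window of raw readings to a handful of summary values and forwards only the summary. The function name, the metrics chosen, and the one-reading-per-second sensor are illustrative assumptions, not part of any particular product.

```python
import json
from statistics import mean
from typing import Dict, List


def summarize_window(readings: List[float]) -> Dict[str, float]:
    """Reduce a window of raw sensor readings to a few key metrics."""
    if not readings:
        raise ValueError("window must contain at least one reading")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }


if __name__ == "__main__":
    # Hypothetical window: one temperature reading per second for an hour.
    raw = [20.0 + (i % 10) * 0.1 for i in range(3600)]
    summary = summarize_window(raw)
    # Compare what would cross the network: all raw data vs. the summary only.
    raw_bytes = len(json.dumps(raw).encode())
    summary_bytes = len(json.dumps(summary).encode())
    print(f"raw: {raw_bytes} bytes, summary: {summary_bytes} bytes")
```

The raw readings stay at the Edge for real-time use, and the bandwidth bill to the central cloud scales with the number of summaries rather than the number of readings.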

What Does Edge Architecture Look Like?

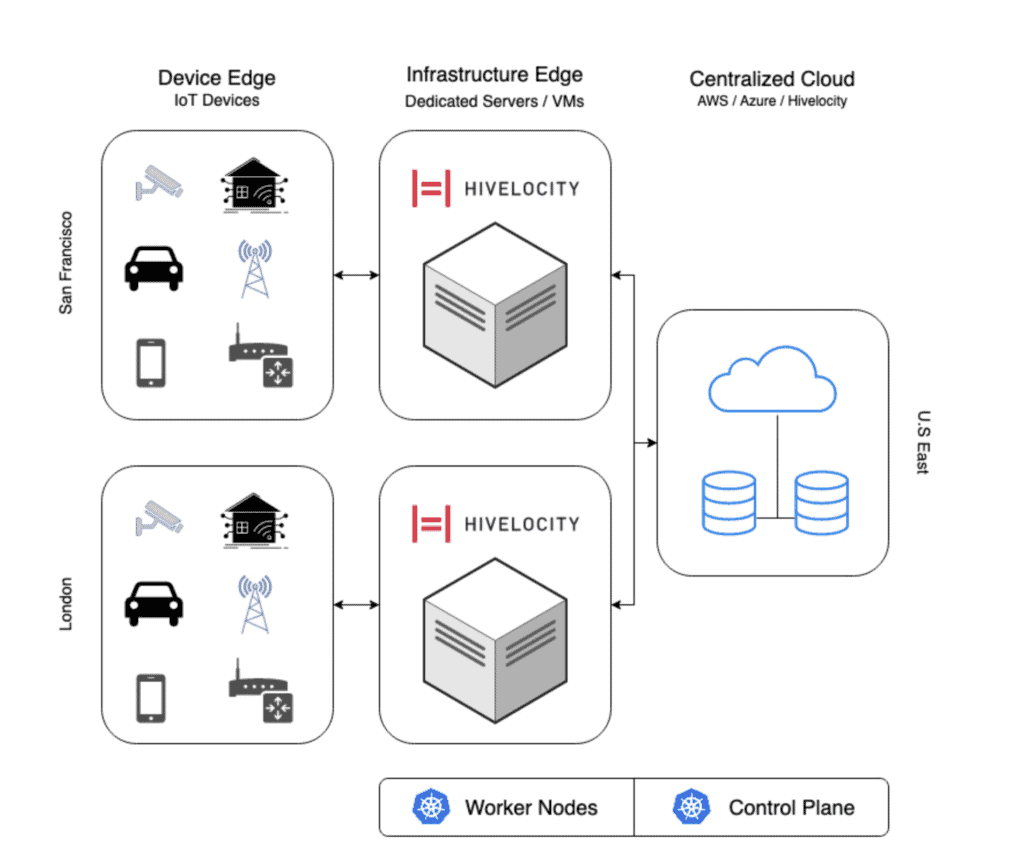

You can think of the Edge as having two sides: an infrastructure edge and a device edge, which work together and coordinate back to a centralized cloud.

The Device Edge consists of devices and services at the end of the last-mile network, things that the end user interacts with: smartphones, wearables, vehicles, IoT aggregators, and other devices. To connect to the internet, these devices must use some form of last-mile network, such as a cell tower.

The Infrastructure Edge consists of devices and services at the beginning of the last-mile network hosted in various aggregation hubs and regional data centers as close to the device edge as possible. These are the data centers that are closest to the end users.

A typical edge architecture, then, combines devices at the device edge that process data and send it to the closest infrastructure edge for storage and further processing. The infrastructure edge then sends any aggregated information back to your central cloud, if you have one.

Below is a diagram of a traditional edge computing setup. K8s worker nodes are deployed in data centers close to where IoT devices are generating data. In the example below, these worker nodes are running in San Francisco and London. The nodes then communicate back to a K8s control plane running inside a centralized cloud provider in the U.S. East region. Most of the processing is done at the Infrastructure Edge (the local data centers), which send only aggregated data back to the centralized cloud.
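One way to express this placement, sketched under assumptions: each regional worker node carries the well-known `topology.kubernetes.io/region` node label, and a Deployment uses a `nodeSelector` so its pods run only on nodes in that region. The region values (`san-francisco`, `london`), the app name, and the image are hypothetical placeholders. The sketch builds the manifest as a Python dictionary; because `kubectl apply -f` accepts JSON as well as YAML, the printed output could be saved to a file and applied.

```python
import json
from typing import Any, Dict

# Well-known Kubernetes node label for a node's region. On bare-metal edge
# nodes you typically set it yourself, e.g.:
#   kubectl label node <node-name> topology.kubernetes.io/region=<region>
REGION_LABEL = "topology.kubernetes.io/region"


def edge_deployment(name: str, image: str, region: str, replicas: int = 1) -> Dict[str, Any]:
    """Build a Deployment manifest whose pods are scheduled only in one region."""
    # Include the region in the pod labels so per-region Deployments
    # never have overlapping selectors.
    labels = {"app": name, "region": region}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"{name}-{region}", "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Pin the pods to worker nodes labeled with this region.
                    "nodeSelector": {REGION_LABEL: region},
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical regions and image; use whatever labels your nodes carry.
    for region in ("san-francisco", "london"):
        manifest = edge_deployment("telemetry-aggregator", "example.com/aggregator:1.0", region)
        print(json.dumps(manifest, indent=2))
```

The control plane in the central region still does the scheduling; the node label and selector simply keep each region’s processing on that region’s hardware.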

Challenges of Using Kubernetes at the Edge

K8s is designed to work seamlessly across data centers. As a result, going from multi-region data centers to edge computing is a logical transition. Still, Kubernetes at the Edge does come with its challenges.

- Resources: Rarely do edge devices have the hardware resources for a complete base Kubernetes deployment, as memory on edge devices is typically in short supply. Lightweight and edge Kubernetes distributions have been created specifically to address this challenge by replacing, and in some cases removing, functionality of the worker nodes.

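- Offline-mode: By default, Kubernetes does not handle offline operation well. It assumes continuous network connectivity (over TCP and HTTPS) between each worker node and the control plane, so during a connectivity outage the K8s worker node agent (kubelet) cannot receive updates, and the control plane eventually marks the node as unreachable and evicts its pods so they can be rescheduled elsewhere.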

- Protocol Support: Kubernetes lacks support for common IoT protocols that don’t use TCP/IP, such as Bluetooth or serial Modbus (Modbus RTU).

- Scalability: According to the Kubernetes documentation, 5,000 nodes or 150,000 pods is the upper limit for a single cluster, but edge deployments could span tens of thousands of nodes and millions of pods.
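The scalability limit comes down to simple arithmetic. This sketch estimates the minimum number of separate clusters an edge fleet would need, using the per-cluster limits from the Kubernetes “Considerations for large clusters” guide (5,000 nodes, 150,000 pods, and 110 pods per node). It is only a lower bound: it ignores the total-container limit, control-plane sizing, and network topology, and the fleet size in the example is hypothetical.

```python
# Per-cluster limits from the Kubernetes "Considerations for large clusters" guide.
MAX_NODES_PER_CLUSTER = 5_000
MAX_PODS_PER_CLUSTER = 150_000
MAX_PODS_PER_NODE = 110


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division without floating-point rounding."""
    return -(-a // b)


def min_clusters(total_nodes: int, total_pods: int) -> int:
    """Lower bound on how many clusters a fleet needs under the documented limits."""
    if total_nodes <= 0 or total_pods < 0:
        raise ValueError("need at least one node and a non-negative pod count")
    if total_pods > total_nodes * MAX_PODS_PER_NODE:
        raise ValueError("more pods than the nodes can hold at 110 pods per node")
    # Whichever limit (nodes or pods) is hit first determines the cluster count.
    return max(ceil_div(total_nodes, MAX_NODES_PER_CLUSTER),
               ceil_div(total_pods, MAX_PODS_PER_CLUSTER))


if __name__ == "__main__":
    # Hypothetical fleet: 20,000 edge nodes running 2 million pods in total.
    print(min_clusters(20_000, 2_000_000))  # 14
```

By node count alone, 20,000 nodes would fit in four clusters, but 2 million pods push the minimum to 14, which is one reason large edge fleets end up split across many clusters.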

Light Kubernetes Distributions for the Edge

To address the challenges and resource problems outlined above, many open-source variations of Kubernetes have been developed to allow for greater functionality in edge applications. [2] These variations are sometimes called “light” K8s distros. Other edge-specific issues – such as offline-mode and protocol support – are addressed to varying degrees by some of these solutions but not others.

K3s

K3s, the most popular of the lightweight distributions covered here with 20k+ GitHub stars, is a small, batteries-included Kubernetes distribution that makes it easy to run Kubernetes anywhere, not just at the Edge. A sandbox-level CNCF project packaged as a single 40MB binary that runs the complete Kubernetes API, K3s removes some of the dispensable features of Kubernetes to decrease deployment size. Additionally, K3s automatically applies Kubernetes manifests and Helm charts placed in its server’s manifests directory, making your deployments easier to manage.

Of all the light Kubernetes alternatives, K3s is by far the easiest to get up and running on the widest range of hardware.

MicroK8s

MicroK8s, developed and maintained by Canonical, is a Kubernetes distribution designed to run fast, self-healing, and highly available Kubernetes clusters. While MicroK8s abstracts away much of the complexity of running a K8s cluster, it comes with a larger binary and footprint than similar solutions like K3s, as it bundles some of the most widely used Kubernetes configuration, networking, and monitoring tools (such as Prometheus and Istio) as add-ons. Unlike the other options on this list, however, MicroK8s is incompatible with ARM32 architectures.

If you are not deploying on ARM32 architectures and are looking for a more standard K8s distribution, you may want to give MicroK8s a shot.

KubeEdge

KubeEdge is an incubation-level CNCF project built specifically to tackle the challenges of edge computing environments. Edge worker nodes need to maintain their target deployment state even during outages of connectivity to the control plane. KubeEdge solves this by replacing the kubelet of base K8s with its own edge agent, EdgeCore, whose Edged module manages the containerized workloads on the node. EdgeCore connects back to the cloud via WebSockets and keeps enforcing the target state even when cloud connectivity is lost. KubeEdge also supports non-TCP communication methods, such as Bluetooth, all while requiring a mere 70MB of memory to run a node.
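The idea behind this kind of edge autonomy can be illustrated with a toy model. The class below is hypothetical and is not KubeEdge code: it caches the last desired state received from the cloud and keeps reconciling local workloads against that cache whenever the cloud cannot be reached. Workloads are reduced to replica counts, and “starting” a workload is just a dictionary update.

```python
from typing import Callable, Dict

# Hypothetical model: workload name -> desired replica count.
DesiredState = Dict[str, int]


class EdgeAgent:
    """Toy edge agent that keeps enforcing the last desired state it received."""

    def __init__(self) -> None:
        self.cached_desired: DesiredState = {}
        self.running: DesiredState = {}

    def sync(self, fetch_desired: Callable[[], DesiredState]) -> bool:
        """Try to refresh desired state from the cloud; return True if online."""
        try:
            self.cached_desired = dict(fetch_desired())
            return True
        except ConnectionError:
            # Cloud unreachable: keep the cached copy instead of giving up.
            return False

    def reconcile(self) -> DesiredState:
        """Bring local workloads in line with the cached desired state."""
        for name, replicas in self.cached_desired.items():
            if self.running.get(name) != replicas:
                self.running[name] = replicas  # stand-in for starting/stopping containers
        for name in list(self.running):
            if name not in self.cached_desired:
                del self.running[name]  # stand-in for removing an unwanted workload
        return dict(self.running)


if __name__ == "__main__":
    def cloud_online() -> DesiredState:
        return {"sensor-gateway": 2}

    def cloud_offline() -> DesiredState:
        raise ConnectionError("control plane unreachable")

    agent = EdgeAgent()
    agent.sync(cloud_online)
    agent.reconcile()
    agent.running["sensor-gateway"] = 0  # simulate a local crash
    print(agent.sync(cloud_offline))  # False: the cloud is unreachable
    print(agent.reconcile())  # {'sensor-gateway': 2}: restored from the cache
```

The key design choice is that losing the cloud only stops updates to the desired state; it never stops the node from enforcing the state it already has.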

KubeEdge is a new yet compelling thin K8s distribution that suits many edge scenarios, as it was designed to overcome a number of edge-specific challenges. If K3s is unfit for your scenario, KubeEdge will likely be what you need.

OpenYurt

OpenYurt is a sandbox-level CNCF project designed to meet common DevOps requirements on typical edge infrastructure, while giving users the same experience managing edge applications as if they were running in the cloud. It addresses cloud-edge orchestration challenges that Kubernetes does not handle on its own, such as unreliable or disconnected cloud-edge networking, edge node autonomy, edge device management, and region-aware deployment. Additionally, OpenYurt keeps full Kubernetes API compatibility, is vendor agnostic, and, perhaps most importantly, is simple to use.

SuperEdge

Another relatively new project worth mentioning is SuperEdge. Initiated by Tencent, Intel, VMware, Huya, Cambricon, Capital Online, and Meituan, SuperEdge is a sandbox-level CNCF project similar to KubeEdge but with a few key differences. To quote the maintainers, what sets SuperEdge apart is that it is not intrusive to Kubernetes source code, so users don’t need to consider compatibility with Kubernetes version and ecology. In other words, SuperEdge doesn’t replace kubelet. It also supports network tunneling using TCP, HTTP, HTTPS, and SSH.

Getting Started with K8s at the Edge

So now that we’ve answered some questions about Kubernetes and its role in edge infrastructure, the question becomes this: are you ready to get started with K8s at the Edge? Whether you know exactly what you’re looking for or need help choosing hardware, software, and locations for your infrastructure edge, Hivelocity’s team of solutions architects is happy to sit down with you and help you plan your next deployment.

So, if you think Kubernetes at the Edge might be exactly what your organization needs to achieve its fullest growth potential, then give Hivelocity a try. We’ll get you up and running quickly in one or more of our 35+ data centers around the world. Take your compute to the Edge with Hivelocity.

Open a chat or call one of our sales agents to get started.

Looking for more information on Kubernetes at the Edge? Watch this webinar, Kubernetes on the Edge, featuring Hivelocity Operations Director, Richard Nicholas, alongside Ivan Fetch of Fairwinds and Mike Vizard of Techstrong Group, as they discuss all things K8s at the Edge. Registration for the on-demand recording is free, so be sure to check it out! It’s an interesting and informative discussion.

To view the webinar, please visit: https://webinars.containerjournal.com/kubernetes-on-the-edge

Footnotes:

[1] We agree with the definition of the edge as stated in the Linux Foundation’s State of the Edge report.

[2] There are other closed source alternatives such as IBM Edge Application Manager (IEAM).